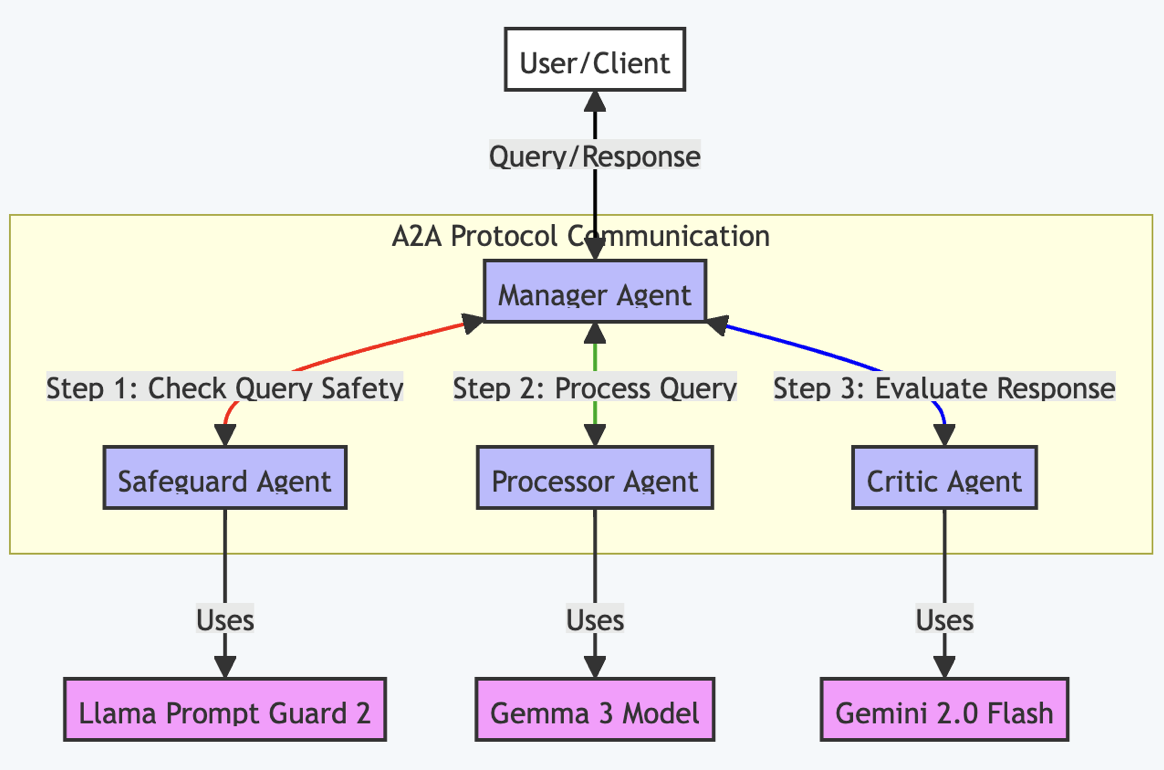

Why do we need autonomous AI agents?Screenshot for running client in Google Vertex AIScreenshot for running tests in Google Vertex AIImage generated by authorAlternatives to SolutionLet’s be honest – there are other ways to skin this cat:Single Model Approach: Use a large LLM like GPT-4 with careful system promptsSimpler but less specializedHigher risk of prompt injectionRisk of LLM bias in using the same LLM for answer generation and its criticismMonolith approach: Use all flows in just one agentLatency is betterCannot scale and evolve input validation and output validation independentlyMore complex code, as it is all bundled togetherRule-Based Filtering: Traditional regex and keyword filteringFaster but less intelligentHigh false positive rateCommercial Solutions: Services like Azure Content Moderator or Google Model ArmorEasier to implement but less customizableOn contrary, Llama Prompt Guard 2 model can be fine-tuned with the customer’s dataOngoing subscription costsOpen-Source Alternatives: Guardrails AI or NeMo GuardrailsGood frameworks, but require more setupLess specialized for prompt injectionLessons Learned1. Llama Prompt Guard 2 86M has blind spots. During testing, certain jailbreak prompts, such as:Andwere not flagged as malicious. Consider fine-tuning the model with domain-specific examples to increase its recall for the attack patterns that matter to you.2. Gemini Flash model selection matters. My Critic Agent originally used gemini1.5flash, which frequently rated perfectly correct answers 4 / 5. For example:After switching to gemini2.0flash, the same answers were consistently rated 5 / 5:3. Cloud Shell storage is a bottleneck. Google Cloud Shell provides only 5 GB of disk space — far too little to build the Docker images required for this project, get all dependencies, and download the Llama Prompt Guard 2 model locally to deploy the Docker image with it to Google Vertex AI. Provision a dedicated VM with at least 30 GB instead.ConclusionAutonomous agents aren’t built by simply throwing the largest LLM at every problem. They require a system that can run safely without human babysitting. Double Validation — wrapping a task-oriented Processor Agent with dedicated input and output validators — delivers a balanced blend of safety, performance, and cost. Pairing a lightweight guard such as Llama Prompt Guard 2 with production friendly models like Gemma 3 and Gemini Flash keeps latency and budget under control while still meeting stringent security and quality requirements.Join the conversation. What’s the biggest obstacle you encounter when moving autonomous agents into production — technical limits, regulatory hurdles, or user trust? How would you extend the Double Validation concept to high-risk domains like finance or healthcare?Connect on LinkedIn: https://www.linkedin.com/in/alexey-tyurin-36893287/ The complete code for this project is available at github.com/alexey-tyurin/a2a-double-validation. References[1] Llama Prompt Guard 2 86M, https://huggingface.co/meta-llama/Llama-Prompt-Guard-2-86M[2] Google A2A protocol, https://github.com/google-a2a/A2A [3] Google Agent Development Kit (ADK), https://google.github.io/adk-docs/