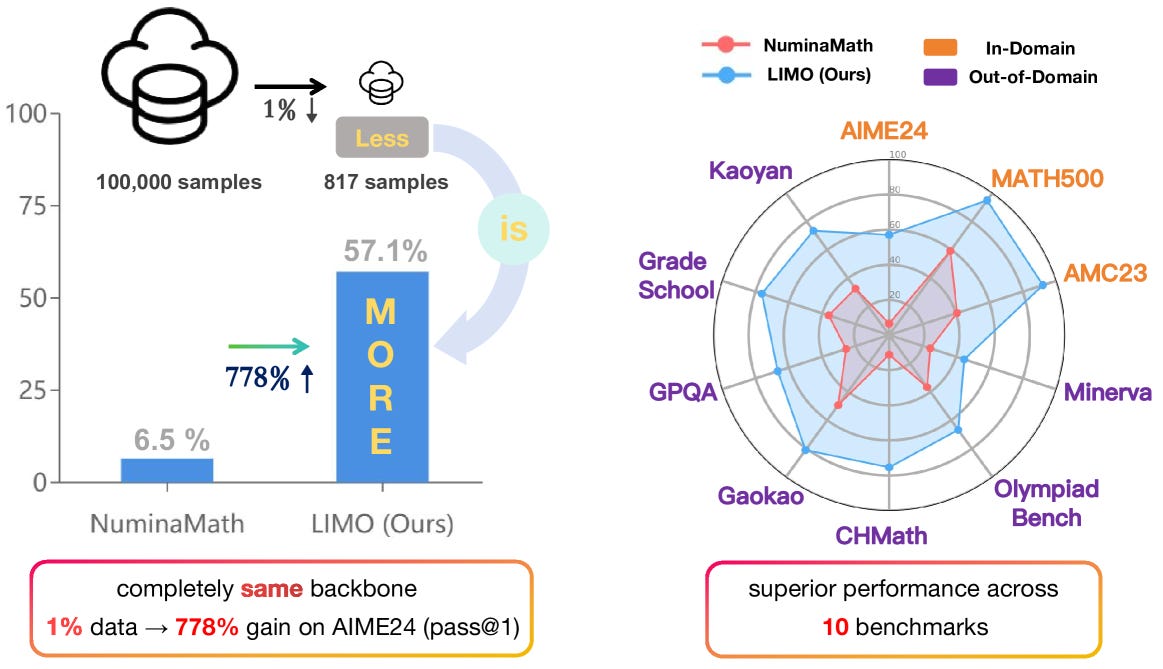

For years, researchers have trained AI systems by feeding them massive datasets. The approach works, but it can be expensive and unwieldy. The top paper on AIModels.fyi this week, "LIMO: Less Is More for Reasoning," shows a different path. It demonstrates that when two key conditions are met – rich pre-trained knowledge and sufficient computational space for reasoning – a model can achieve exceptional mathematical reasoning from a small set of precisely chosen training examples.
