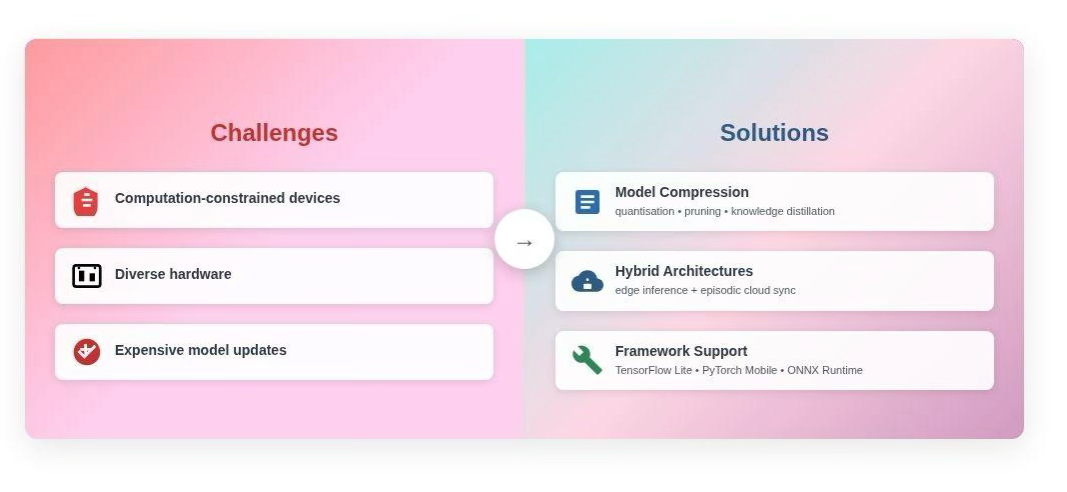

Startups are under constant pressure to ship products that are simple, affordable, and scalable without compromising performance or user trust. Recent advances in specialized hardware have made on-device inference, or Edge AI, viable much earlier than expected. Platforms like Apple’s Neural Engine, Google’s Edge TPU, and modern microcontrollers now provide enough on-device compute to support practical inference at scale.

Why now? The strategic timing for Edge AI adoption

The timing for edge-first MVPs is especially favorable. Consumer hardware is advancing rapidly, and AI acceleration is now a core feature rather than a niche capability. Apple, Qualcomm, and Intel have all introduced dedicated neural processing units in recent product roadmaps, designed to support fast, energy-efficient on-device inference and lower the barrier to edge deployment.

At the same time, development tooling has matured. Frameworks such as TensorFlow Lite, PyTorch Mobile, and ONNX Runtime reduce friction in deploying and maintaining models across diverse devices. What once required highly specialized teams can now be managed by focused startup engineering groups, which matches the profile of early-stage companies.

Challenges and how start-ups can overcome them

Challenges and solutions range from diverse hardware to framework support. Edge AI does come with constraints. Limited compute capacity, device fragmentation, and model update complexity are common concerns.
However, these challenges are increasingly well understood and manageable.

- Model compression techniques, such as quantization, pruning, and knowledge distillation, allow models to run efficiently on constrained hardware without unacceptable accuracy loss.
- Hybrid architectures, combining on-device inference with periodic cloud synchronization, provide a practical balance between performance and flexibility.
- Improved framework support continues to abstract many device-specific deployment issues, lowering operational burden.

When applied thoughtfully, these approaches allow startups to benefit from edge deployment without accumulating long-term technical debt.

Edge AI and the new MVP playbook

Traditional MVPs often prioritized speed to market through cloud-based services. While fast to deploy, those systems could be expensive to scale and vulnerable to latency or connectivity issues. Edge AI changes that equation.

By shifting inference onto the device, startups can build MVPs that:

- Operate reliably in low-connectivity environments, opening access to underserved markets
- Protect user data by default, strengthening trust in regulated sectors
- Deliver the responsiveness required for AR/VR, robotics, and wearable technologies

For example, a remote patient monitoring system deployed in rural areas can trigger alerts instantly when anomalous vital signs are detected, even without reliable internet access. Agricultural sensors far from central infrastructure can optimize irrigation in real time. These are practical advantages available today, not speculative scenarios.

Looking ahead: The Edge as the new cloud

Industry forecasts point to continued decentralization of data processing. Gartner predicts that by 2025, 75% of enterprise-generated data will be processed outside traditional centralized data centers (Gartner, 2021).
This represents a structural shift in how intelligent systems are delivered, not just a marginal optimization.

For startups, the takeaway is clear: Edge AI is not only a cost-saving measure; it is a strategic design choice. Teams that adopt edge-first architectures early can differentiate on performance, privacy, and user experience, factors that increasingly determine whether an MVP gains traction or stalls.

As hardware improves and development tools become more accessible, edge computing is likely to become the default foundation for intelligent products rather than the exception.
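
To make the quantization technique mentioned among the model compression approaches more concrete, here is a minimal sketch of symmetric int8 weight quantization in plain Python. It is an illustration only, not how TensorFlow Lite, PyTorch Mobile, or ONNX Runtime implement it; the function names `quantize_int8` and `dequantize` and the sample weights are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of float weights to the int8 range."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest magnitude to 127; use 1.0 for an empty or all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to [-127, 127].
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.003, 1.0]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Worst-case rounding error is about half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight is stored in one byte instead of four, at the cost of a rounding error bounded by roughly half the scale. Production toolchains typically add per-channel scales and calibration data, which this sketch leaves out.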

Edge AI for start-ups: Why on-device intelligence is the future of MVPs
